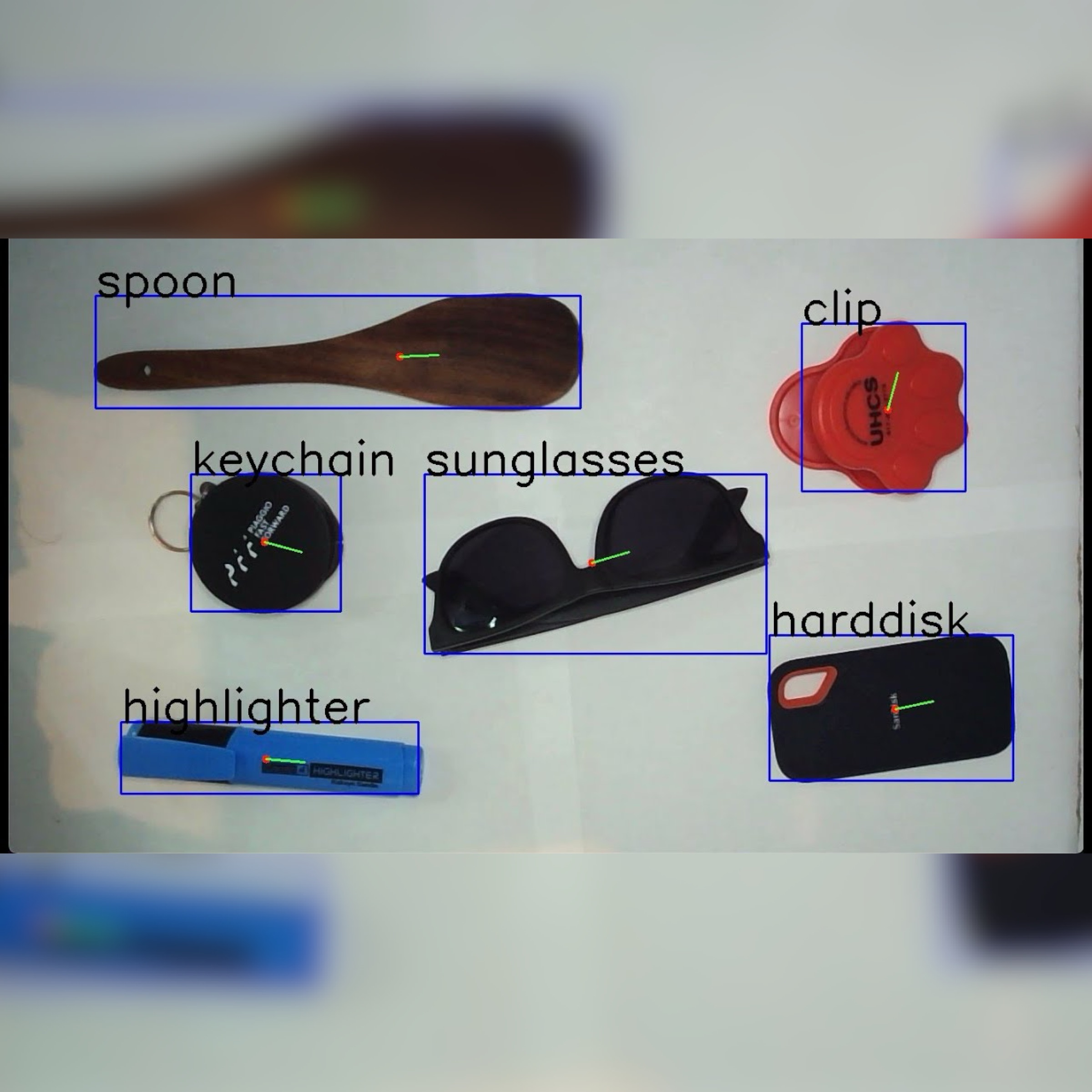

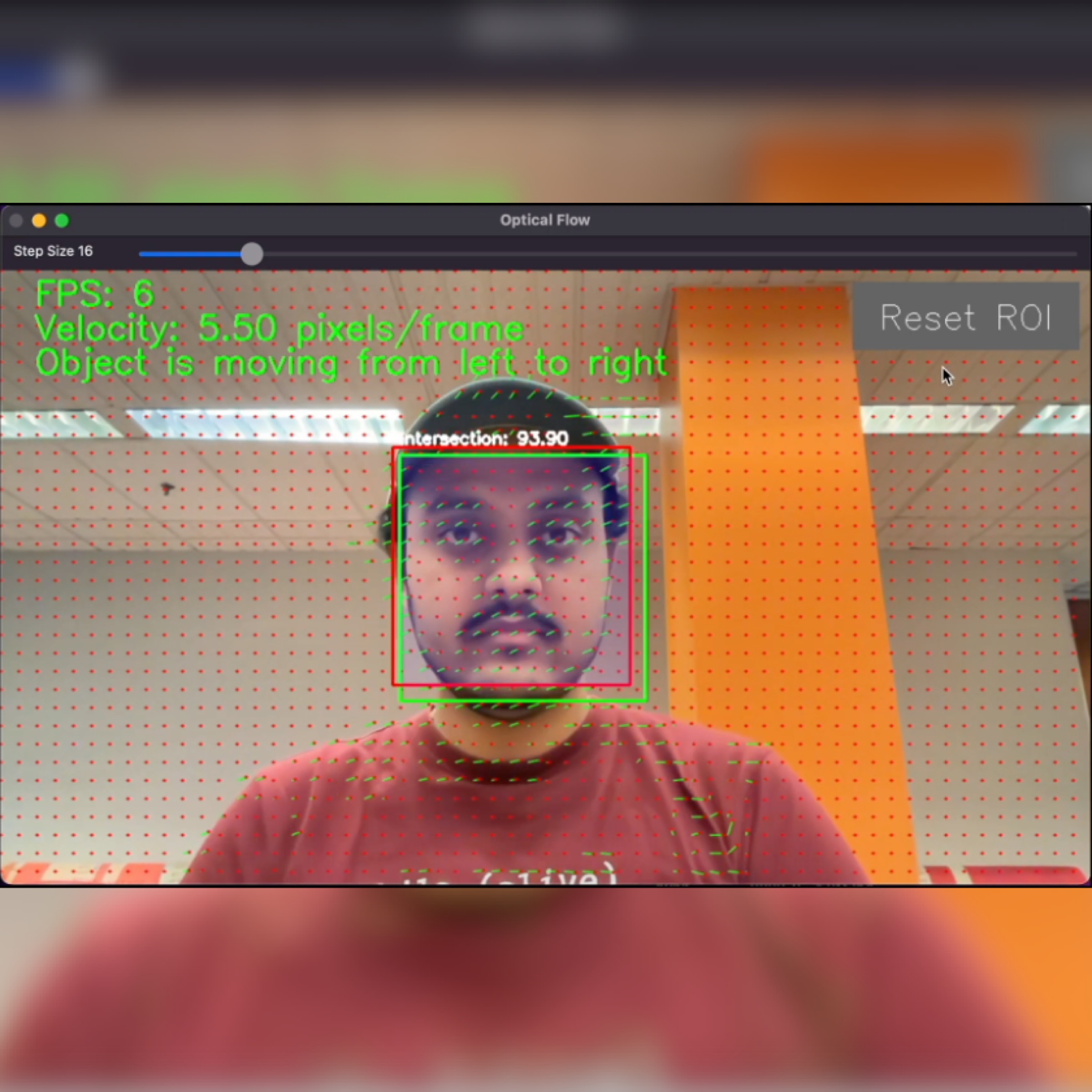

Realtime 2D Object Detection

A robust object classification system that processes frames from a live webcam video stream and classifies objects into 15 categories. It uses K-Nearest Neighbor (KNN) classification and achieves 95% classification accuracy.
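The KNN step can be illustrated with a minimal sketch. It assumes each object in a frame has already been reduced to a fixed-length numeric feature vector. The `Example` struct, the `classifyKnn` and `euclideanDistance` functions, the Euclidean distance metric, and the tie-breaking rule are all illustrative assumptions, not this project's actual code.

```cpp
#include <algorithm>
#include <cassert>
#include <cmath>
#include <cstddef>
#include <map>
#include <string>
#include <utility>
#include <vector>

// Hypothetical labeled training example: a feature vector plus its object label.
struct Example {
    std::vector<double> features;
    std::string label;
};

// Euclidean distance between two feature vectors of equal length.
double euclideanDistance(const std::vector<double>& a, const std::vector<double>& b) {
    assert(a.size() == b.size());
    double sum = 0.0;
    for (std::size_t i = 0; i < a.size(); ++i) {
        double d = a[i] - b[i];
        sum += d * d;
    }
    return std::sqrt(sum);
}

// Classify `query` by majority vote among its k nearest training examples.
// Ties go to the tied label whose closest member is nearest to the query.
std::string classifyKnn(const std::vector<Example>& training,
                        const std::vector<double>& query, std::size_t k) {
    // Pair every training example's distance to the query with its index.
    std::vector<std::pair<double, std::size_t>> dists;
    dists.reserve(training.size());
    for (std::size_t i = 0; i < training.size(); ++i) {
        dists.emplace_back(euclideanDistance(training[i].features, query), i);
    }

    // Move the k smallest distances to the front, in ascending order.
    k = std::min(k, dists.size());
    std::partial_sort(dists.begin(), dists.begin() + k, dists.end());

    // Count votes per label among the k nearest neighbors.
    std::map<std::string, int> votes;
    for (std::size_t i = 0; i < k; ++i) {
        ++votes[training[dists[i].second].label];
    }

    // Scan neighbors in distance order; strict '>' keeps the earliest
    // (closest) label among those tied for the most votes.
    std::string best;
    int bestVotes = 0;
    for (std::size_t i = 0; i < k; ++i) {
        const std::string& label = training[dists[i].second].label;
        if (votes[label] > bestVotes) {
            bestVotes = votes[label];
            best = label;
        }
    }
    return best;
}
```

With raw features on very different scales, one feature can dominate the Euclidean distance, so a real system may need to normalize features before comparing them. Larger values of `k` tend to smooth out noisy training examples at the cost of blurring class boundaries.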

C++, OpenCV, Xcode